It is probably obvious from the postings I’ve made here at BraindeadProjects that my home is nothing more than a giant networking lab. When I wanted to learn how GPON worked, I prepped my “lab” by building a 12 strand fiber-optic ring through the walls of my home and connecting the five Cisco switches throughout the house together using bi-directional SFPs

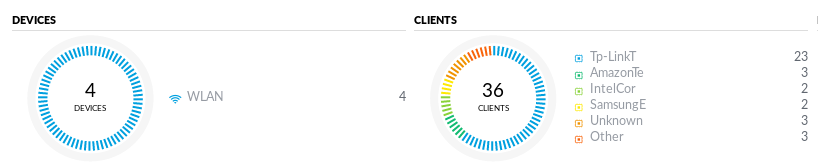

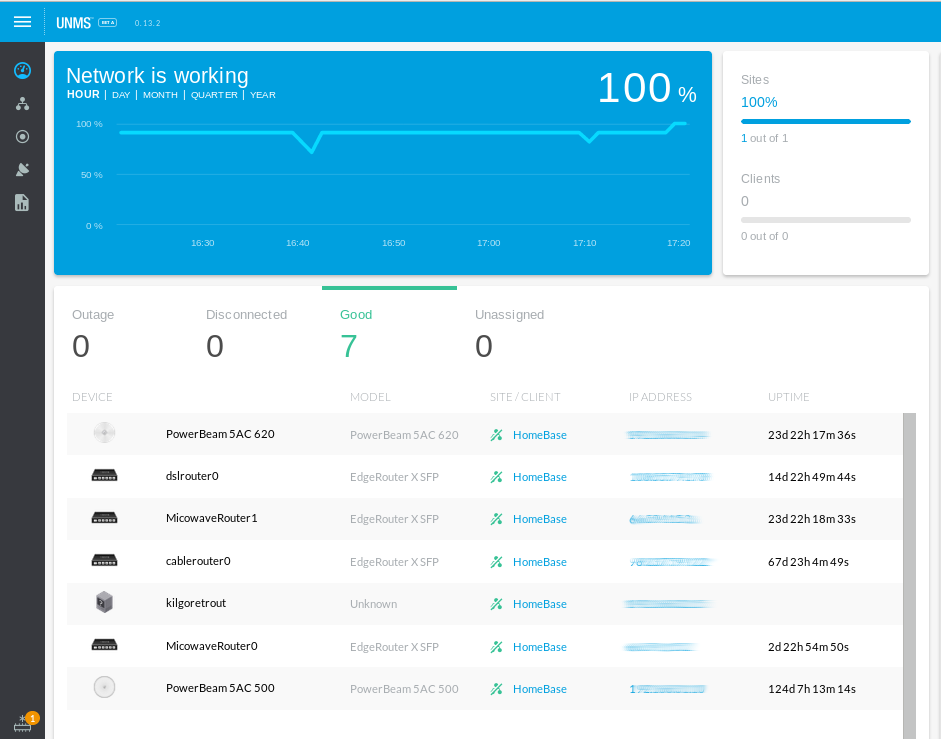

When I needed better wireless coverage, I built out a Ubiquiti Unifi wireless network and later rewired most of the light switches in my home with Wifi-enabled TP-Link switches so that I could voice control the home using Amazon Alexa Echo’s.

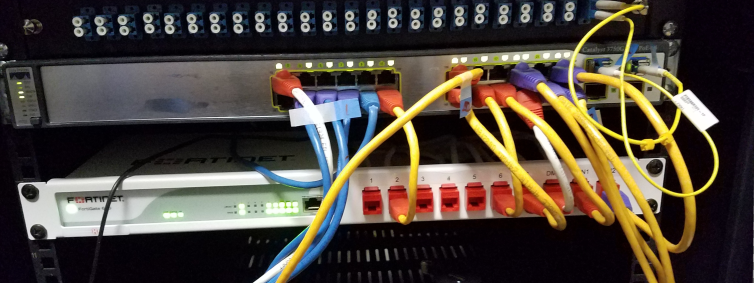

Wanting to centralize my firewall policies, long ago I routed each of the 12 production VLANs at home run through a Fortigate 60C High Availability cluster.

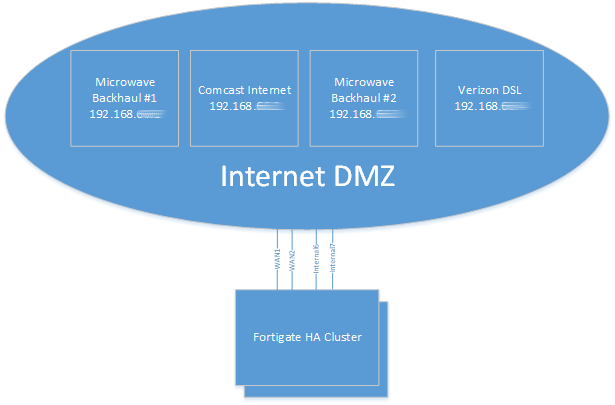

The home has 4 Internet connections with 2 diverse paths: The 3rd floor terminates two 5Ghz microwave PtMP links from a Wireless ISP that I used to work for. The basement terminates a Verizon 5Mbps/760Kbps DSL line, and a Comcast 100Mbps cable link.

So how do I maintain connectivity to the Internet if a connection goes down or if I lose power on a floor of my home? Previously I had a simple VRRP setup: Whichever connection was performing best I would manually set to be the VRRP master and fail over if connectivity went down. If I wanted to specify that email should operate over the microwave backhauls, I would create another VRRP group (so that I could have redundancy), policy-based route email traffic to that group, and setup an IP SLA to test the connection. This was a bit of an administrative nightmare, so I did so sparingly.

Then the world became abuzz with “Software Defined Wide Area Networking“. To qualify as “SD-WAN” Gartner has four required characteristics: The ability to support multiple connection types (MPLS, LTE, Internet, etc), support for dynamic path selection, load sharing over the links, and simplified provisioning (Zero Touch Provisioning).

I’ve had the opportunity to evaluate a small handful of “SD-WAN” solutions, each with their own pros and cons: Some are surprisingly lacking in features (despite large sales footprints), some are full of features but have lackluster provisioning, and some are insanely expensive (at least for home use).

Initially I had settled on adding a different vendor’s SD-WAN appliance into the home network and purchased 3 of their devices. After waiting for the shipment for over a month, I received a full refund from the seller with little explanation. I seriously lucked out.

While waiting for my boxes to arrive, I had the chance to borrow and test the platform and found some limitations – namely only support for 2 WAN connections and no active-active support (so I couldn’t use my other 2 WAN connections) . Then I took a closer look at the Fortigate’s I already had in my network.

Fortigate supports re-configuring each of their 10 ethernet connections for various use. This allowed me to take ports that are typically used for LAN connections and re-purpose them into WAN connections. This is a major plus. The downside was my exisiting Fortigate 60C’s don’t support the lastest FortiOS (6.0) code.

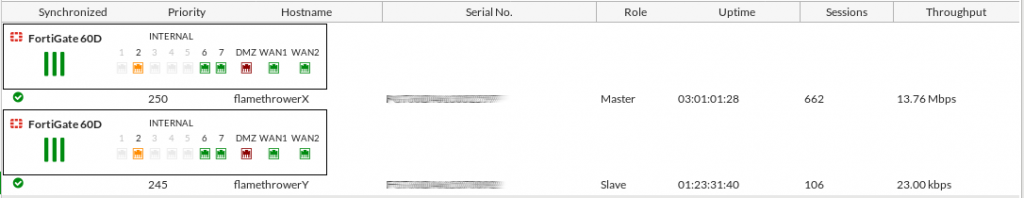

For the price of the other vendor’s limited platform (x3), I could purchase 2 used Fortigate 60D’s off Ebay – plus purchase rack-mount trays for each unit. No more Fortigate sitting atop another device in the network racks. Since I don’t need the advanced features the platform provides (anti-virus, IPS/IDS, etc), the second-hand solution is perfect for my needs (Firewall policies, SD-WAN, VPNs).

So here’s how Fortinet does things:

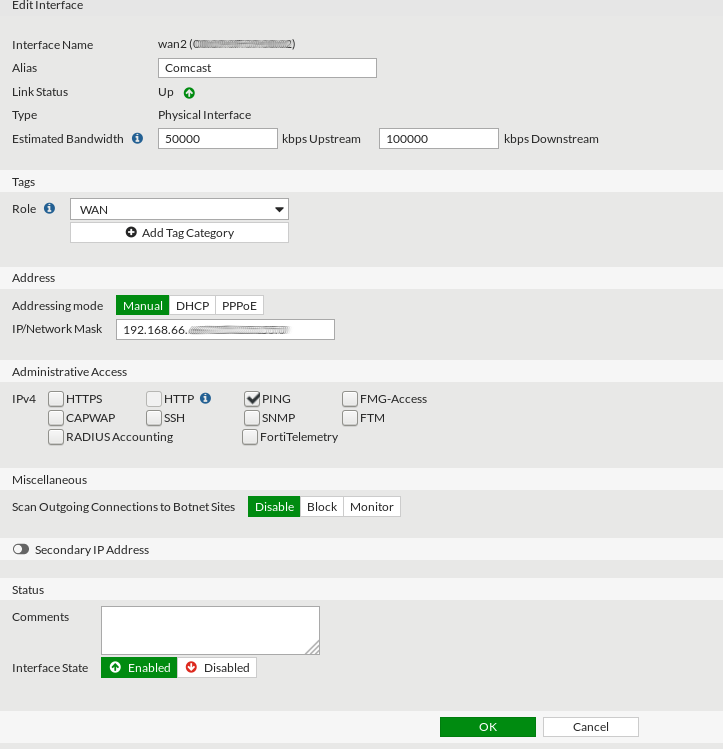

Configure an IP on each of the WAN connections you intend to use. In my instance, VLAN 66 is my “Internet DMZ” where each of the 4 Ubiquiti EdgeRouter X SFPs bring the Internet connections into my network.

To allow the Fortigate to have multiple WAN interfaces in the same subnet, you have to override the system default preventing that:

flamethrowerX # show system settings

config system settings

set inspection-mode flow

set allow-subnet-overlap enable

set gui-fortiextender-controller enable

end

When creating the WAN interfaces, you’ll need to manually specify the bandwidth of each link. This is one unfortunate downside to the Fortigate solution – it cannot measure available bandwidth dynamically.

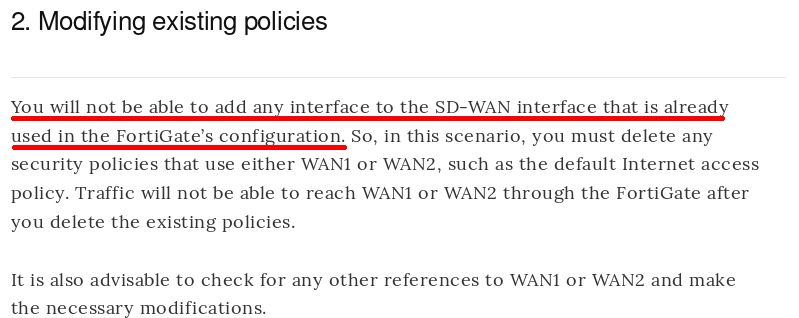

When selecting the members of the “SD-WAN” interface, you may find that you’re unable to include certain interfaces. The most likely cause of this is a firewall policy referencing that interface. If you don’t follow the cookbook, you’ll likely run into this frustrating problem, so RTFM.

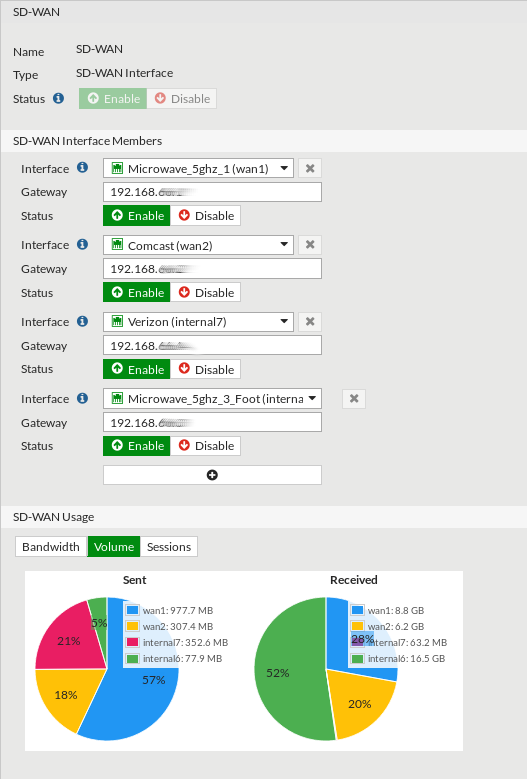

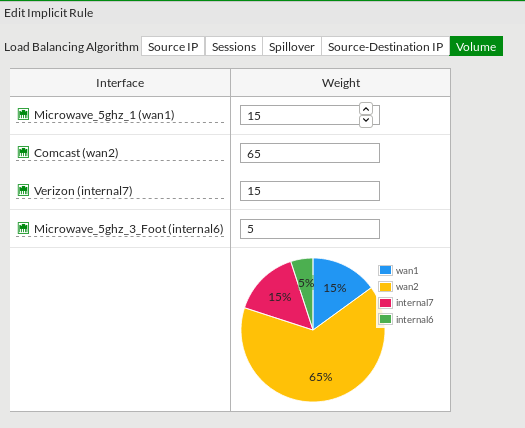

When you aggregate interfaces into the SD-WAN interface, you’ll need to specify the gateway of each WAN link and the default load-balancing mechanism. In my instance I’m using “Volume-based” balancing.

Under the SD-WAN rules section you can further specify how you want the volume dispersed.

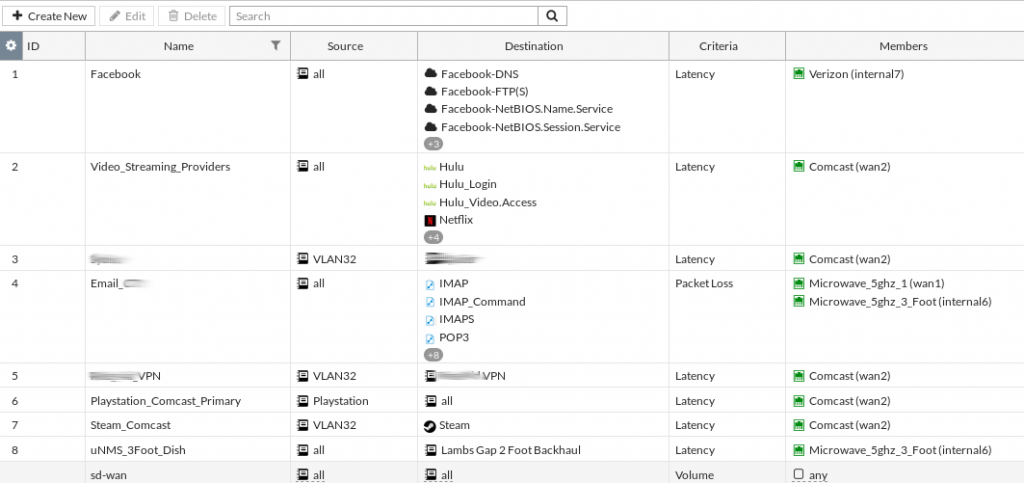

After creating the base settings you can have the real fun. The PBR rules that used to take additional thought and design are now the matter of a point and click solution. Making email route over the 5Ghz links by default is the simple matter of creating an SD-WAN rule. Video streaming services such as NetFlix and Hulu can simply be prioritized to run over the higher bandwidth cable connection – and failover to the other options when needed.

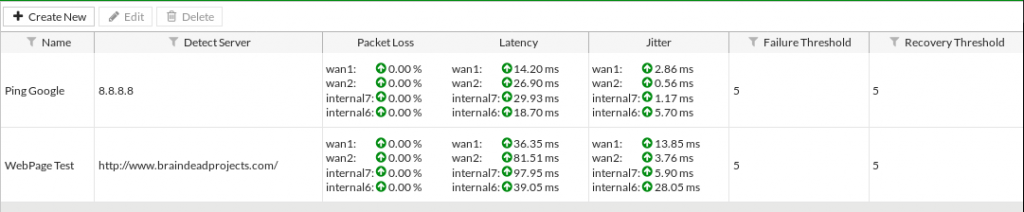

The SD-WAN SLA’s are somewhat simplistic. You have the option to either ping or pull a web request from a designated server. Neither solution detects MTU issues in a path. If I were to disable TCP MSS clamping on my DSL line the system continues to use it despite a user being unable to download content from websites correctly.

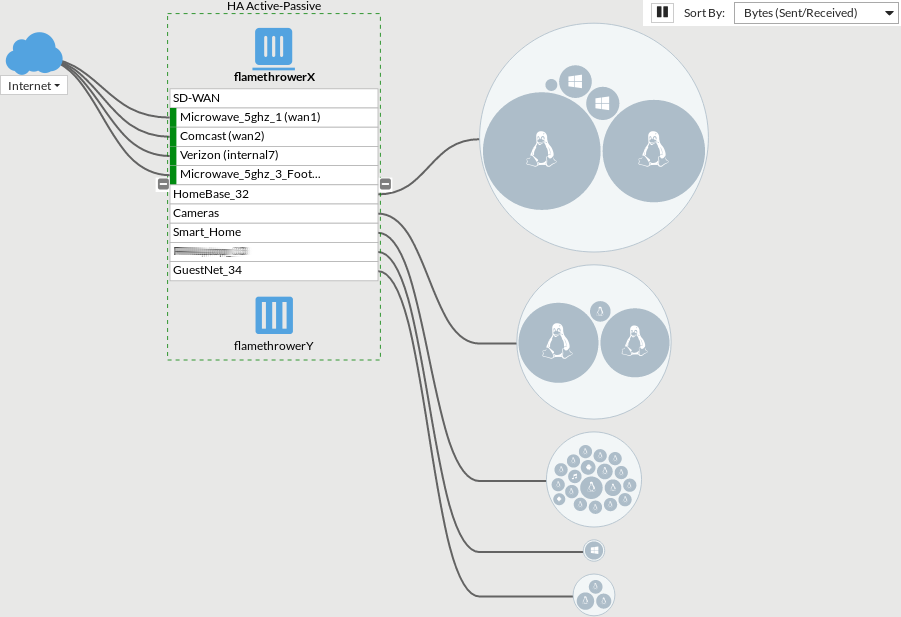

One of my favorite features in the web interface is the ability to look at the logical topology and see which users in each VLAN are consuming what amount of traffic.

You can also drill into the flows determining which flow is using which WAN link.

So, what do I not like about the solution? I’m able to rename an interface, but on some screens the GUI displays the interface name and NOT the alias. This requires additional thought “Oh, interface7 is the DSL”.

I also wish I had the ability in each flow to see which SD-WAN rule was hit. This is important since it can help you verify that things like Email are classified correctly (I found that IMAP wasn’t considered part of the “All Email” out-of-the box classification in the non-Fortinet solution I initially purchased).

I’m still working to perfect the HA failover on the system. The general idea is that if the one Fortigate can’t ping the VRRP addresses I had setup on the WAN routers or LAN switches the backup unit should take over. “Remote Link Monitoring” took me some time to get working on the former Fortigate 60C’s, so I’m not discouraged yet.

Overall you can certainly see the power of what Fortinet’s re-branded “WAN Link Load-balancing” has to offer. The ability to leverage redundant Internet links in such a simple manner places some serious power in the hands of companies with limited IT resources – and I’m only scratching the surface of the capabilities.

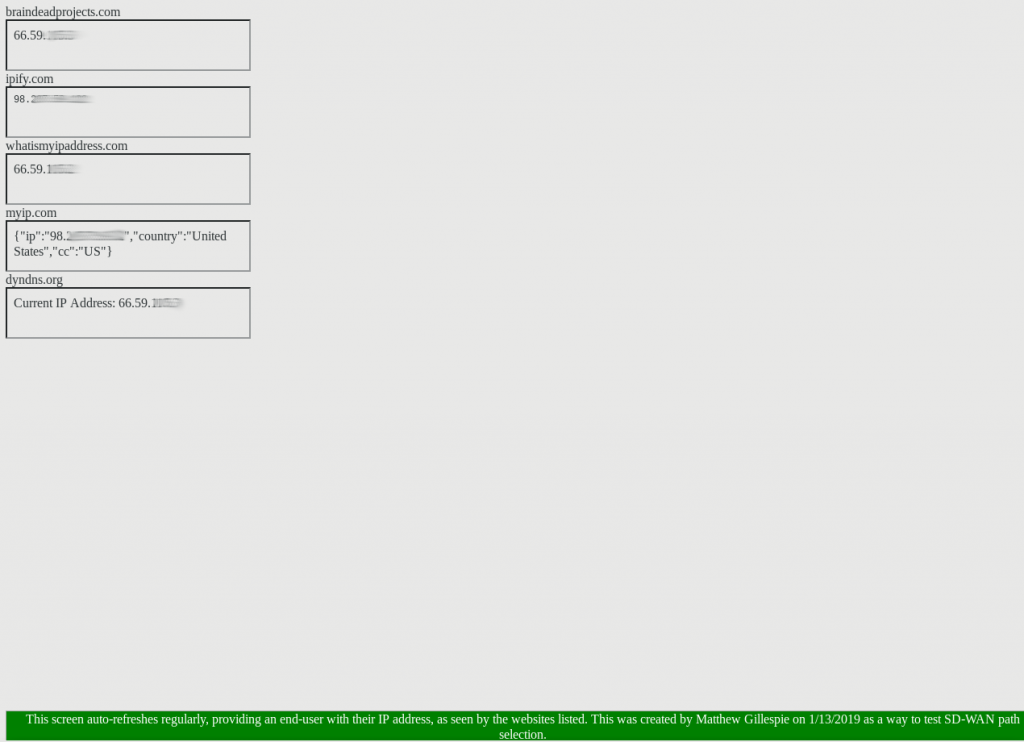

If you’re looking to test your own WAN load balancing, I’ve put together a webpage that will display your IP address, as seen from 5 different IP lookup sites on the Internet. Feel free to use it for testing. You can find it here.